Data Models Gone Bad Can Spoil Your Predictions

February 22, 2022

Artificial Intelligence and Machine Learning Require Ongoing Tuning

We recently discussed how important it is to choose the correct set of data from which to drive predictions and inferences. It’s also important that the data not be skewed in any particular direction— or else it will generate skewed predictions. In this post, we discuss the number-crunching processes downstream from data acquisition.A data model represents what was learned by a machine learning algorithm. As data flows downstream through your various business processes, the data models supporting your algorithms that direct your systems or people can fall out of alignment. This occurs when the premises and assumptions surrounding the data models change enough to erode the accuracy of the analytics. Or…the data models take the algorithms out of meta-stable equilibrium and into turbulent predictive behavior.

It’s not a good thing when data models have gone bad, spoiling your predictions and inferences!

Artificial Intelligence and Machine Learning: Not Set and Forget Tools

Understanding why this happens requires realizing that machine learning algorithms and artificial intelligence are not “set and forget” tools. Like any other software system, they require tuning to maintain optimal performance. Also, it is important to understand the boundaries of the data values going into the algorithms so you aren’t pushing them out of their “flight envelope.”Tuning includes the normal maintenance engineering tasks you would apply to any software deployment—upgrades, patches, and adaptations—in reaction to infrastructure changes. As you maintain, be sure to validate the algorithms are still “in tune” and that data feeds adhere to the original assumptions and conditions.

The process is similar to what Formula One race car drivers go through. They know the techniques and wisdom (data model) gathered about the nuances of one track do not apply to another track. They also contend with races that require a change to the car type—Indy, NASCAR, or an off-road vehicle; all these things impact their driving technique. So as premises and assumptions change from race-to-race and from car-to-car, drivers adapt their internal algorithms that direct them on how to best navigate each track.

Factors Causing Data Models to Spin-out

Here’s a rundown of various factors that can cause data models to derail:- Concept Drift occurs due to statistical changes in the interrelationships of interdependent variables. For instance, economic <If this, then that> statements can change due to differences in unemployment. This has happened during Covid with a record number of people simply resigning and dropping out of searching for a job—mainly because they consider the risks of their job to be unacceptable, plus government subsidies are more economically beneficial than actual work.

- Spec Creep (also known as Changing Requirements and related to Concept Drift) happens when a data science team designs a model to perform a focused task, but another internal team (such as marketing) decides to modify the model’s capabilities. Perhaps there was a change in how the marketing team manages product promotion campaigns. The accuracy of the outputs is affected by changes in functional requirements rather than by causal changes in data.

- User Persona Change is another type of Spec Creep that takes place because of a change in the user base. New users representing a different target segment might require new sources of data to draw similar inferences as previous target segments. For example, as people from a younger generation enter an older age range that an algorithm was tuned for, they bring different behavioral characteristics that might require a completely different set of data to base predictions upon.

- Data Drift can dramatically change the accuracy of algorithms when the data models are trained on data from a different era or different environmental situation. Take irrigation algorithms…just because they worked for a specific geography with a particular economic set point in the past, they may not do so in the future due to changes in the climate, economic considerations, water pricing, or usage regulations.

- Feature Drift relates to changes associated with machine learning model inputs, which are not static; change is inevitable, and the models depend heavily on the data they point to. Some models can handle minor input changes, but as distributions divert from what the model saw in training, performance on the task will degrade.

- Prediction Drift is a change in the actuals within a production model, which decays over time. Monitoring for prediction drift allows you to measure model quality. As actuals change, models that make predictions can be right one day and wrong the next, which causes a regression in model performance.

Tips for Keeping Analytic Algorithms Healthy

Keeping the algorithms that drive your analytics healthy and clear is vital to the mission of converting raw data into information that enables your end-users to augment their decisions on how to make smarter products and how to run the business more efficiently. Here’s a set of high-level tips on how to deal with the factors that cause data models supporting your algorithms to fall out of alignment:- Develop and maintain a static model of your algorithms as a baseline for future comparisons.

- Always test the edge cases and watch for sudden changes in model behavior.

- Set up a recurring schedule to retrain and update the models.

- Implement processes to detect changes to concepts, specs, user personas, data, features and predictions.

- Measure the changes you discover and prioritize the need to react; not all changes will warrant an adjustment to your models.

- Create new models as necessary to resolve any changes.

Moving Up Maslow’s Pyramid

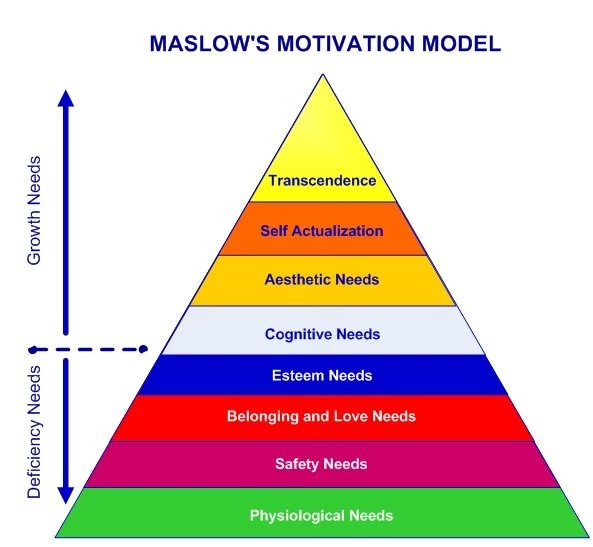

In this article, the process of monitoring and adapting algorithms maps to the middle part of Maslow’s pyramid—cognitive needs. Abraham Maslow was an American psychologist who developed a hierarchy of needs to explain human motivation as shown below:

In our next post, we’ll discuss bringing your data analysis up to the top of the pyramid—actualization and transcendence. Once you know your algorithms align with the results you are looking for in relation to the data you are crunching, you want to make sure the resulting inferences are actionable. That’s when you gain the ability to augment the decision-making of your end-users so they can make smarter products and more efficient business processes.

We’d be pleased to work with you on your next data analysis project. Contact 3Pillar today to learn how we can help.

About the author

BY

SHARE

Recent blog posts

Stay in Touch

Keep your competitive edge – subscribe to our newsletter for updates on emerging software engineering, data and AI, and cloud technology trends.