The Keys to Leading Innovation, with Greg Brandeau

The keys to leading innovation successfully are the topics of discussion on the latest episode of “The Innovation Engine” podcast. We take a look at the importance of building a supportive community and psychological safety in creating an environment that rewards risk-taking, why it’s more important that executives be ringleaders rather than visionaries, and the importance of creative abrasion, creative agility, and creative resolution to the innovation process.

Greg Brandeau joins us on this week’s episode to discuss those topics and more. Greg is co-author of Collective Genius: The Art and Practice of Leading Innovation, which he wrote along with Dr. Linda Hill of the Harvard Business School, Emily Truelove, and Kent Lineback. Collective Genius was named one of “The 20 Best Business Books to Read this Summer” by Business Insider. It shared this distinction with a number of other notable books published in 2014, including Flash Boys by Michael Lewis, Think Like a Freak, and books by Twitter founder Biz Stone and LinkedIn founder Reid Hoffman. If you’re interested in learning more about the book, please visit its home online at https://collectivegeniusbook.com.

Greg is the former COO and President of Maker Media and the former CTO of The Walt Disney Studios. He spent the better part of a decade as an executive at Pixar before the company was acquired by Disney, and he started his career at NeXT. Greg has spent his career both observing and working within high-performing, highly innovative teams.

Among the highlights of this week’s episode:

- Greg tells the story of his first encounter with Steve Jobs during his final 10-minute interview at NeXT and the test the Apple co-founder gave Greg to see whether he would challenge Jobs’ ideas

- We talk about how Bill Coughran, an SVP of Engineering at Google, led innovation while managing two teams of engineers trying to solve a highly complex problem in different ways

- “It’s not whose idea is right but what idea is right,” Greg says, and he shares the Steve Jobs axiom that “It’s more important to be successful than it is to be right”

- We talk about the framework that Collective Genius lays out for building organizations with innovation embedded in their DNA

- Greg shares a story from his days at Pixar that illustrates the importance of “psychological safety,” when a year’s worth of work on Toy Story 2 was erased, as were all the backups save for one. Watch the video embedded below for an animated version of how Toy Story 2 was almost lost by an RM* command, then saved by the film’s technical director being on maternity leave.

Listen to the Episode

Interested in hearing more? Tune in to the full episode of “The Innovation Engine” podcast below.

About The Innovation Engine

Since 2014, 3Pillar has published The Innovation Engine, a podcast in which a wide range of innovation experts discuss topics including technology, leadership, and company culture. You can download and subscribe to The Innovation Engine on Apple Podcasts. You can also tune in via the podcast’s home on Spotify to listen online, via Android or iOS, or on any device supporting a mobile browser.

Product Design in Cybersecurity, with Jason Cyr of Cisco (Part 1)

How does Cisco produce one of the best-integrated cybersecurity platforms in the world? Jason Cyr, VP and Head of Product Design at Cisco, joins The Innovation Engine to talk with 3Pillar Field CTO Lance Mohring about how design is deeply embedded in Cisco’s product development process.

Jason walks us through the market and organizational context that shapes Cisco’s unified product design process, which frequently involves incorporating new products into the overall product mix in the wake of an acquisition. He then gives some specific details on how product design can improve cybersecurity by preventing everyday configuration errors, while also anticipating zero-day vulnerabilities.

Jason outlines the Cisco product, design, and engineering team’s unique GOAT process, which stands for Give Outcomes A Try and is influenced by Josh Seiden’s book Outcomes Over Output. The GOAT process allows product managers, engineers, and designers to identify and align on end-user outcomes before the iterative development process even begins.

Our conversation is so meaty we had to break it up into two parts. Be sure to stay tuned for Part 2 next week, where we’ll dive into how Jason anticipates AI will impact product design in the near future and much more.

Listen to the Episode

Listen to this episode of The Innovation Engine on Apple Podcasts, Spotify, or via the embed below.

Episode Highlights

- Jason gives a firsthand account of the modern history of cybersecurity product design

- Jason explains how product design can reduce cyberattacks

- The story of how the Cisco team created their Give Outcomes a Try (GOAT) process and the impact it has had

Resources

- Connect with Jason Cyr on LinkedIn

- Connect with Lance Mohring on LinkedIn

- Check out Jason’s blogs on Medium

- Read “Outcomes Over Output” by Josh Seiden

- Listen to Ep. 197 of The Innovation Engine: How to Drive Outcomes Over Outputs, with Josh Seiden

Data Integration: More Than the Sum of Its Parts

Every day, office workers solve problems using disparate systems that need to communicate with each other to accomplish the goal at hand. No industry is immune to these operational headaches and inefficiencies. It often falls to internal teams to act as the interface between archaic, disconnected systems and databases.

This lack of modernization and foresight comes at a cost. According to the Harvard Business Review, some teams lose up to 60% of their working day on actions like extracting data from multiple systems only to enter it into another. This lost productivity contributes to “disconnection debt”: the costs companies incur through lackluster results and missed key performance indicators.

The Challenge: Disconnection Debt

This operational nightmare hit home for our team at 3Pillar. At one point, different departments were using six separate business systems (Salesforce, Workday, OpenAir, Lever, NetSuite, and Engage) to meet their functional and operational needs. Each system captured information specific to its department’s work and generated data in a different format.

This workflow created data silos that hurt the efficiency and accuracy of our reporting process. Generating reports from these different sources had historically been a tedious task.

The Solution: An Integrated Approach

To address this, 3Pillar’s leadership team envisioned a more expansive approach. The idea was to develop a centralized data platform designed to function as the single source of truth for all reporting requirements throughout the organization. This move would lay a foundation for integrating data from all six systems, eliminating the manual processes associated with Key Performance Indicator (KPI) generation.

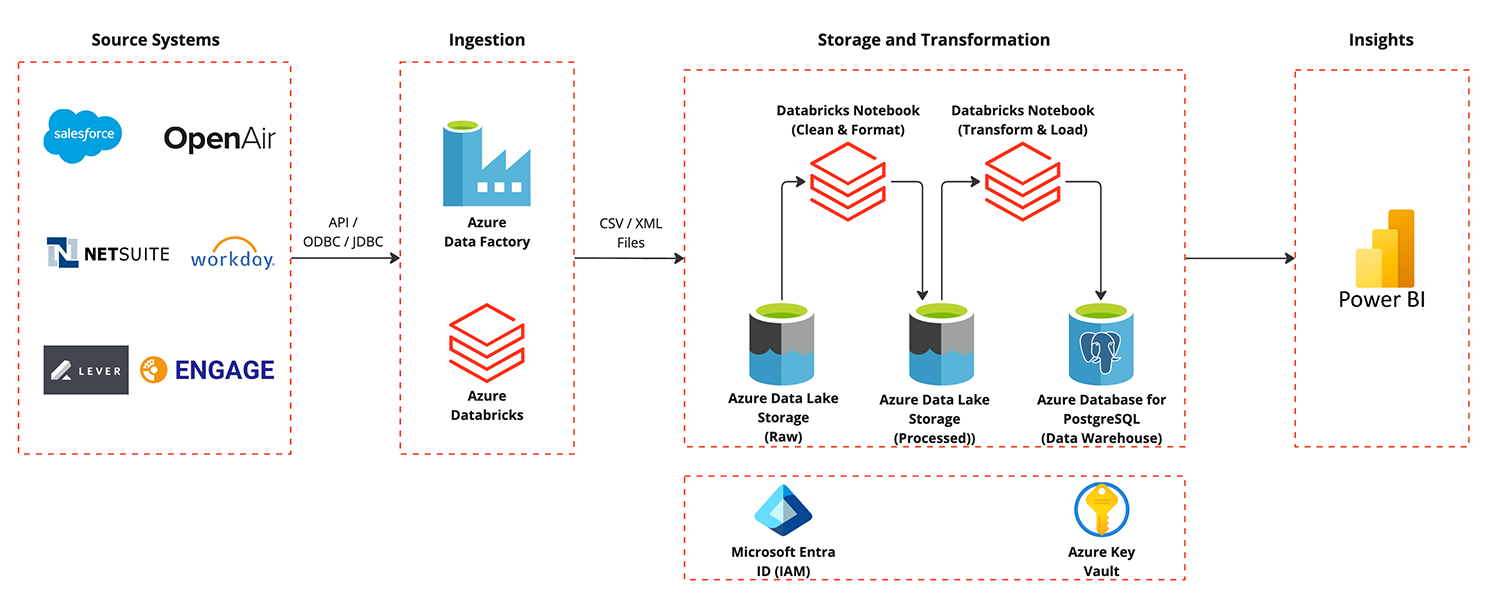

Over six months of active development, we designed and implemented a cloud-based data platform that ingests, transforms, and stores data from the six separate systems through a daily batch process.

Here’s a look at some of the foundational elements of this solution:

Data Ingestion: Our team leveraged Azure Data Factory to ingest data from NetSuite and Azure Databricks to ingest data from five other sources. The ingestion process is an incremental loading process and is based on a field such as “last execution date,” to ensure efficient data retrieval and processing.
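To make the incremental loading idea concrete, here is a minimal, library-free sketch of a watermark-based load. It is not the Azure Data Factory or Databricks pipeline itself; the function name `incremental_load`, the `modified_at` field, and the sample rows are all hypothetical and stand in for whatever "last execution date" field a given source exposes.

```python
from datetime import datetime, timezone


def incremental_load(source_rows, last_execution):
    """Return rows changed after the watermark, plus the advanced watermark.

    `modified_at` is an assumed field name used only for this illustration.
    """
    fresh = [row for row in source_rows if row["modified_at"] > last_execution]
    # Advance the watermark to the newest change seen; keep it if nothing is new.
    new_watermark = max((row["modified_at"] for row in fresh), default=last_execution)
    return fresh, new_watermark


# Hypothetical source records from one of the business systems.
rows = [
    {"id": 1, "modified_at": datetime(2024, 1, 1, tzinfo=timezone.utc)},
    {"id": 2, "modified_at": datetime(2024, 1, 3, tzinfo=timezone.utc)},
    {"id": 3, "modified_at": datetime(2024, 1, 5, tzinfo=timezone.utc)},
]
watermark = datetime(2024, 1, 2, tzinfo=timezone.utc)

batch, watermark = incremental_load(rows, watermark)
print([row["id"] for row in batch])
```

Each daily run would persist the returned watermark and pass it to the next run, so only records changed since the previous execution are retrieved.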

Storage: Data is stored initially in the ADLS Gen2 Raw Container, which is encrypted at rest with 256-bit AES by default.

Data Transformation: The Azure Databricks pipeline performs data cleansing and formatting tasks, subsequently transferring the processed data to the ADLS Gen2 Processed Container.

Data Loading: Tables are classified into two categories: those that maintain data change history and those that do not. Our team used Azure Databricks to manage data change history using Slowly Changing Dimension Type-2 and to perform additional transformations prior to loading into Azure PostgreSQL DB.
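For readers unfamiliar with Slowly Changing Dimension Type 2, the sketch below shows the core logic on an in-memory table: when a tracked attribute changes, the current row is closed out and a new current row is appended, so history is preserved. This is an illustration in plain Python, not the Databricks implementation; the function `apply_scd2`, the column names `valid_from`, `valid_to`, and `is_current`, and the sample employee data are assumptions made for the example.

```python
from datetime import date


def apply_scd2(history, incoming, key, tracked, today):
    """Apply SCD Type 2 logic, mutating and returning `history`.

    history:  list of dict rows carrying valid_from, valid_to, is_current
    incoming: list of dict rows holding the latest snapshot from the source
    key:      name of the business-key column
    tracked:  columns whose changes should create a new version
    """
    current = {row[key]: row for row in history if row["is_current"]}
    for new in incoming:
        old = current.get(new[key])
        if old is not None and all(old[col] == new[col] for col in tracked):
            continue  # no tracked attribute changed, keep the current version
        if old is not None:
            # Close out the previous version instead of overwriting it.
            old["valid_to"] = today
            old["is_current"] = False
        history.append(
            {**new, "valid_from": today, "valid_to": None, "is_current": True}
        )
    return history


# Hypothetical dimension table and source snapshot.
history = [
    {"emp_id": 7, "dept": "Sales", "valid_from": date(2024, 1, 1),
     "valid_to": None, "is_current": True},
]
incoming = [
    {"emp_id": 7, "dept": "Marketing"},  # changed attribute
    {"emp_id": 8, "dept": "Engineering"},  # brand-new key
]

apply_scd2(history, incoming, key="emp_id", tracked=["dept"], today=date(2024, 6, 1))
for row in history:
    print(row)
```

Tables that do not need change history can skip this step and simply be overwritten or upserted on each load.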

Insights Generation: Power BI connects directly to Azure PostgreSQL DB, which makes reporting more reliable and efficient and gives users fast, accurate access to data.

Security: All stages prioritize security with Azure’s encryption standards and access controls, managed through Azure IAM integration. Azure Key Vault serves as a centralized repository for securely storing and managing sensitive credentials, including Azure Storage Account keys, passwords for the Data Warehouse (DWH), and source system access keys.

Governance: Role-based Access Control (RBAC) in the Data Warehouse (DWH) regulates data access with granular permissions based on user roles and responsibilities. In addition, a client’s IP address must be whitelisted in the server firewall before it can access data in the DWH.
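The two governance checks described above combine as a simple "both must pass" rule. The sketch below illustrates that logic only; in the actual platform these controls would be enforced by the database server's firewall and role grants, not by application code. The role names, table names, network range, and the `can_read` function are all hypothetical.

```python
import ipaddress

# Hypothetical allowlist; 203.0.113.0/24 is a range reserved for documentation.
ALLOWED_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]

# Hypothetical role-to-table grants.
ROLE_GRANTS = {
    "finance_analyst": {"revenue", "invoices"},
    "recruiter": {"candidates"},
}


def can_read(role, table, client_ip):
    """Allow access only if the IP is allowlisted AND the role is granted the table."""
    ip = ipaddress.ip_address(client_ip)
    if not any(ip in network for network in ALLOWED_NETWORKS):
        return False  # firewall check fails before roles are even considered
    return table in ROLE_GRANTS.get(role, set())


print(can_read("finance_analyst", "revenue", "203.0.113.10"))
print(can_read("recruiter", "revenue", "203.0.113.10"))
print(can_read("finance_analyst", "revenue", "198.51.100.5"))
```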

The Outcome: Bring Value to Your Business

This cloud-based data platform made it possible to access data from multiple sources in one place, support business processes, and provide relevant, timely insights to decision makers. Since implementation, we’ve seen the following qualitative and quantitative results:

- Centralized Data Integration: Consolidating data from six disparate systems into a single, reliable source of truth eliminates data silos and ensures consistency.

- Efficient Reporting: Power BI, used together with Azure PostgreSQL DB, streamlines reporting and provides quick, accurate data access. This reduces the manual effort of data preparation and shifts complex calculations to Azure PostgreSQL DB. Ultimately, this eliminates the additional joins that would have been required if different data sources like Excel and CRMs were connected directly to Power BI.

- Enhanced Data Quality: Azure Databricks pipelines ensure data cleansing and formatting, improving data accuracy and consistency across reports and analyses.

- Cost Efficiency: Cloud-based storage and automated data processes reduce operational costs compared to multiple manual processes and systems. Using the data platform, our team reported that development and refresh time were reduced by almost 40%, which in turn reduced operational costs. It also makes it possible to build more complex cross-system reports in half the time previously required.

- Robust Security: Azure’s encryption standards, Role-based Access Control (RBAC), and Azure Key Vault for secure data handling make it possible to meet compliance and governance requirements effectively.

3Pillar: Where strategy meets execution

Having overcome these challenges ourselves, we can help other organizations achieve similar results. Finding a data integration partner, however, is about more than outsourcing. It’s about gaining a strategic partner that ensures your investment of time and resources translates into bottom-line results. It’s high time to find efficiencies at scale through integration.

Ready to get started? Talk to the 3Pillar Global team today.

Your First 90 Days as a Product Leader
