Data Engineering

Unleash the possibilities of your data

Derive actionable intelligence

Advanced data infrastructure, including data lakes, warehouses, streaming, and secure IoT and AI models, can generate valuable business insights.

Boost organizational nimbleness

Rapidly respond to market changes with reliable, scalable data pipelines and real-time analytics.

Accelerate revenue growth and monetization

The right data engineering approach can help create new revenue streams for companies that are sitting on valuable proprietary data and information.

Get to Know Data Engineering 2.0

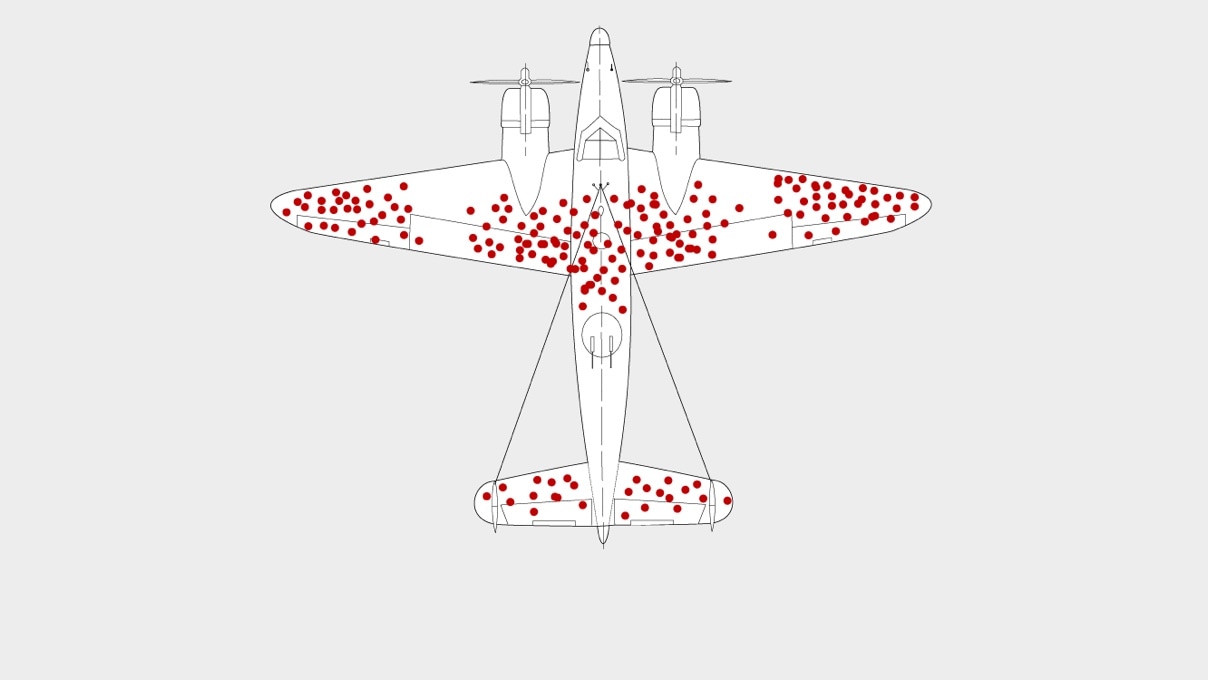

Data Engineering is one of the most rapidly evolving areas of business technology. It’s moving so fast that it now requires a new approach, one we’re calling Data Engineering 2.0. This evolution, and the approach it demands, is driven largely by the proliferation of AI and its reliance on data to produce relevant, valuable outputs. Before you can transform your business with AI, however, you need to ensure your data is accurate, accessible, and actionable.

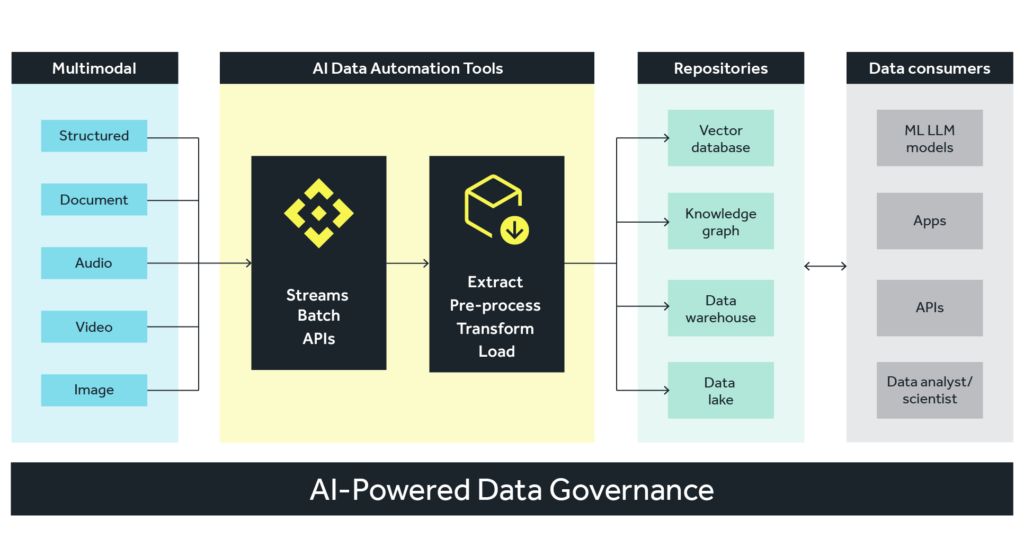

If Data Engineering 1.0 was all about meeting the needs of BI and data analytics, Data Engineering 2.0 is about speed and readiness for multimodal AI application development. At the intersection of data and AI, this evolution presents a duality. On one hand, organizations must prepare their data to get the most out of AI applications. On the other, they must begin to use AI-driven data engineering to cleanse, ingest, process, and store that data before developing those AI applications.

Our Data Engineering teams excel at collaborating with organizations on all types of data initiatives, ensuring they can extract value from their data consistently and at scale. This work spans the data governance realm and includes refining and optimizing data sources, data ingestion, data processing, and data storage.

Data Engineering Components

Collecting and integrating data from diverse sources

Data sources for modern enterprises will include apps and APIs, databases, IoT data, and events like user interactions, transactions, or system logs. As multimodal AI proliferates, companies must ensure they’re prepared to leverage all of those sources, plus a host of external data sources, and many different types of data, including images, audio, video, and other unstructured data.

Refining data ingestion to account for different types

Data ingestion is another key component of Data Engineering 2.0. We help clients establish the right mix of connectors, ETL, data pipelines, orchestration tools, and real-time streams. Ingestion and ETL technologies that we can implement include Amazon Kinesis, AWS Lambda, Amazon Athena, AWS Glue, Apache Kafka, Microsoft SQL Server Integration Services (SSIS), and Amazon EMR.

Improving data processing while minimizing costs

Processing large data sets is necessary to get the most out of your data, yet the costs can quickly add up if you don’t adopt a strategic approach. We can advise on tools and techniques across data processing areas, including real-time data processing, data quality management, scalable infrastructure, and automated data workflows such as data pipelines.

Optimizing data storage for scalability and performance

From data lakes and data warehouses to databases and CDNs, our teams know the ins and outs of your data storage needs. Our experts have used the leading cloud storage technologies for our clients, including Amazon DynamoDB, Amazon DocumentDB, Amazon Redshift, Amazon S3, AWS Lake Formation, Databricks, and Snowflake.

Helping our clients succeed

50% reduction in spend on cloud storage

“3Pillar is a true, reliable partner. The work we have done together has had a real impact on our scalability and on the cost of running our business.”

Alina Motienko

Senior Director, Data Analysis, Comscore

Efficiency. Accuracy. Agility.

Enhance your Data Engineering approach and reap the rewards of Data Engineering 2.0. Reach out today to get started.

Let’s Talk